DOCENT

Learning Self-Supervised Entity Representations from Large Document Collections

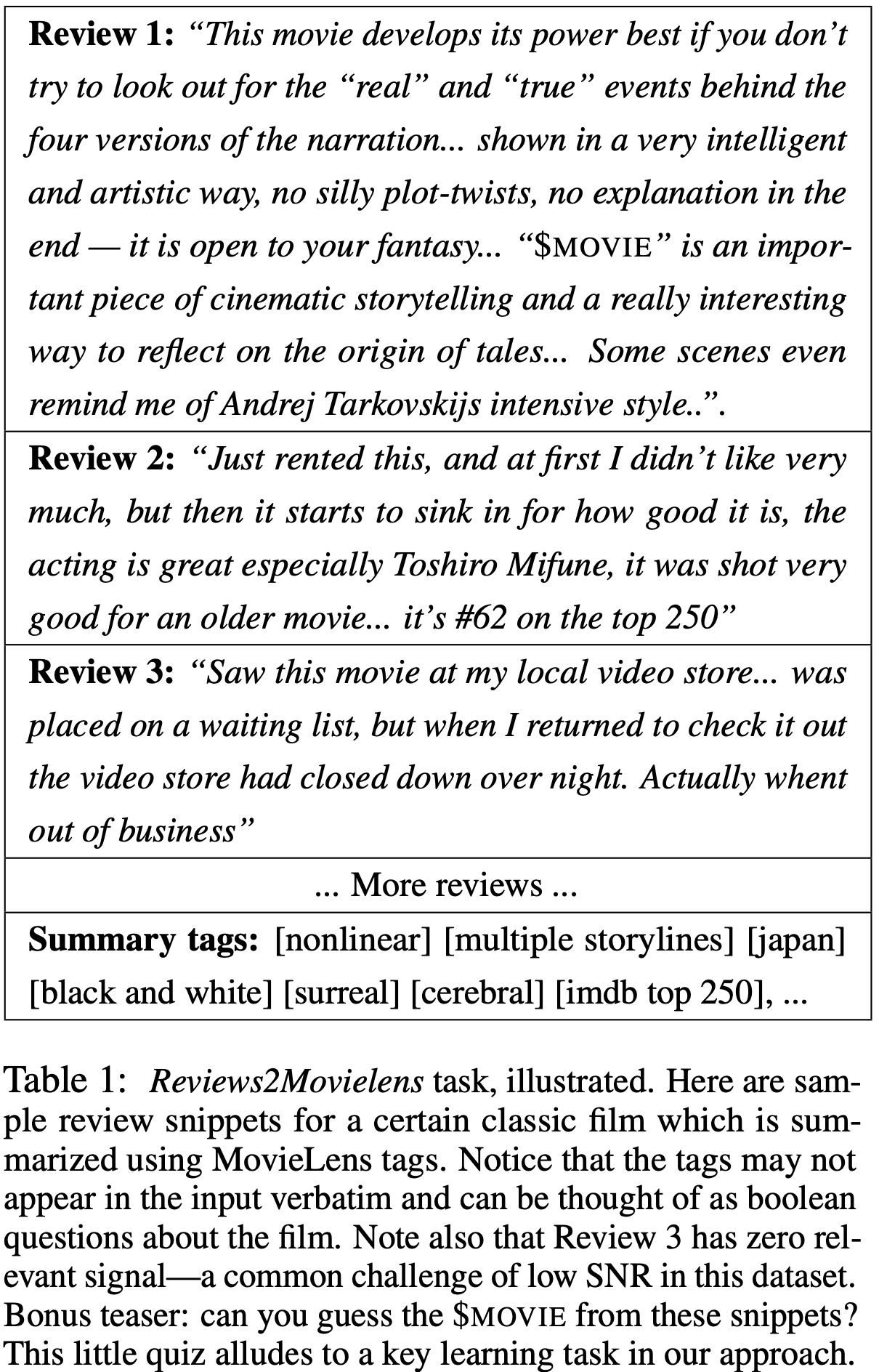

The website contains additional data and scripts for the EACL-2021 paper DOCENT: Learning Self-Supervised Entity Representations from Large Document Collections (arxiv)

Please, see https://goo.gle/research-docent for the Reviews2MovieLens dataset and modeling code.

Datasets

Reddit Movie Suggestion

The dataset contains a collection of 4765 movie-seeking queries and corresponding recommendations, collectively curated and voted on by the Reddit Movie Suggestions community. Worth noting are (a) the conversational, human-to-human language of the queries; (b) the community-recommended movies that, while sparse and possibly biased, can be used as a source of ground truth. While modest in size, the dataset is well-suited to evaluate zero-shot performance on the movie ranking task defined in Section 3.3.

A sample request is below.

{

"request": {

"query_original": "Unreliable Narrator <p>Any suggestions for movies containing unreliable narrators? (Characters version of events is false through mental illness, narcissism, naivety etc) </p>\n",

"query_full": "Unreliable Narrator Any suggestions for movies containing unreliable narrators? (Characters version of events is false through mental illness, narcissism, naivety etc) ",

"query_title": "Unreliable Narrator"

},

"response": {

"result": [

{

"score": 16.0,

"asin": "B00003CWQ2"

},

{

"score": 16.0,

"asin": "B000JMK6LW"

},

{

"score": 16.0,

"asin": "B00003CXZ3"

},

]

}

}

score corresponds to how many upvotes the answer has received.

Scripts

Closed Vocabulary Tag Prediction

In this scenario, evaluation is done on a holdout set of 1000 movies.

See evaluation script here or

Open Vocabulary Tag Prediction

This task is evaluated by withholding parts of the tag vocabulary (500 tags and 6392 movies) so that those tags are never seen in training.

See evaluation script here or